- formal logic

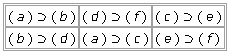

the branch of logic concerned exclusively with the principles of deductive reasoning and with the form rather than the content of propositions. [1855-60]

* * *